Automatically Convert Program Audio into Real-Time Closed Captions and Subtitles!

Reach a Wider Audience!

BroadStream’s VoCaption software uses Automatic Speech Recognition technology to create reliable, accurate real-time captions. It delivers cost savings for broadcasters and an improved experience for their viewers.

VoCaption is live automated closed captioning software that supports broadcasters in various industries:

- Commercial broadcasting

- Government

- Public Access

- Religious Entities

- Educational Centers

- Corporate Broadcasting

VoCaption makes live captioning a “one mouse click” operation that takes the frustration out of hiring human captioners and streamlines your workflow. No matter your industry, VoCaption is real-time captioning software that provides peace of mind.

Reliable Closed Captioning for Scheduled Live Programming or Emergency Broadcasts

VoCaption live captioning software reduces the worry typically associated with producing live closed captions and maintaining their accuracy and reliability.

- Automatic conversion of audio to text in real time.

- Custom vocabulary tools to improve accuracy.

- Alternate word list / profanity filter.

- Available 24/7 for emergencies or breaking news.

- No scheduling issues.

- No need to pay for a full hour of labor if only a few minutes are needed.

Why Caption Live Broadcasts?

All live programming should be captioned, and here’s why.

Accessibility is often governed by local regulations, laws or guidelines, which can vary by country or community. For example, in the US, government regulations require cable operators, broadcasters, satellite distributors and other multi-channel video programming distributors to provide closed captioning on most live programming. Failure to follow these regulations can result in government fines or other sanctions.

Beyond legal requirements, broadcasters also have a moral obligation to make their programming accessible to everyone. Closed captioning:

- Makes programming more accessible to individuals who are deaf or hard of hearing

- Improves the viewing experience and comprehension for those in noisy environments

- Helps viewers whose primary language is different from the language being broadcast

- Helps viewers follow speakers whose accents may be harder for some to understand

- Supports viewers during breaking news or emergencies related to weather, violence or traffic

Next Generation Technology – ASR

Speech-to-text technology, or Automatic Speech Recognition (ASR), has improved dramatically over the last few years. In many situations and genres it now delivers accuracy for live closed captioning that is comparable to a live human captioner.

You can now leverage this technology and reap significant savings and benefits using VoCaption as your primary or back-up captioning solution.

Closed Captioning Improves Comprehension and Retention

The modern world is active and filled with noise and distraction. It’s common to see people doing multiple tasks at the same time: travelling, cooking, exercising and so on. People also watch while using a second screen. Often, individuals are in places that are loud and crowded, or in areas where they must respect a level of quiet.

What does this mean for live programming?

- People can’t always listen to the audio while they watch the video.

- Live closed captions allow viewers to follow along with the information being shared.

- Live closed captioning also makes the information being shared easier to retain. Studies show that people retain information better when they hear it and read it together.

- It also helps individuals to pick up words that they didn’t understand when spoken, and reinforces the information being shared.

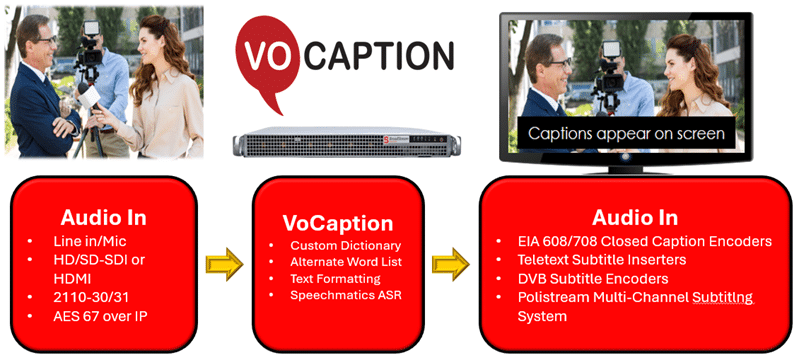

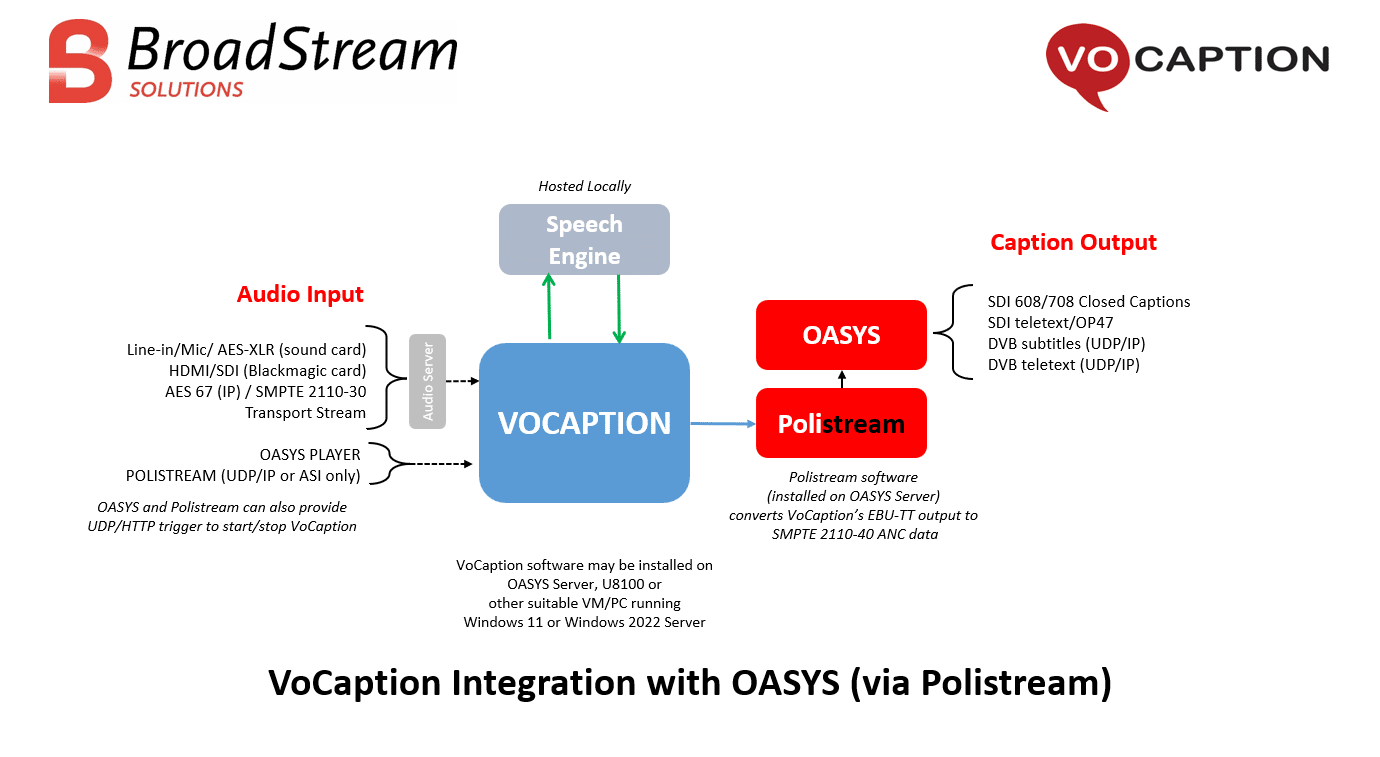

How VoCaption Creates Automated Real-time Closed Captioning

- VoCaption uses Automatic Speech Recognition to convert the spoken word from program audio into the text you see on the screen.

- It delivers industry-leading accuracy with ultra-low latency and supports over 50 languages.

- Accuracy is improved through a custom dictionary for supplemental or program-specific vocabulary, such as proper nouns (people and place names) and specialist terminology.

- A Substitution List provides a profanity filter and ensures consistent presentation.

- Output options include EIA 608/708 closed captions, teletext/OP47 subtitles, DVB bitmap or teletext subtitles, SCTE-27 and open (burnt-in) subtitles – delivered via Polistream, OASYS, Broadcast Pix or a suitable third-party caption/subtitle encoder.

- Delivered captions are saved to file for re-broadcast, VOD delivery or archiving purposes. These can also be used to harvest metadata to improve search within a media library and for social media and SEO improvements.

- Saved files can be given a human review and corrected as needed to ensure perfect captions for future uses.
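
As a rough illustration of how a substitution list of the kind described above could work, the following Python sketch replaces listed words and phrases in a line of caption text. It is not VoCaption's implementation or file format: the example entries, the function name `apply_substitutions` and the `****` masking string are all assumptions made for this example.

```python
import re

# Hypothetical substitution list: spoken form -> form shown on screen.
# Entries are illustrative only and do not come from VoCaption.
SUBSTITUTIONS = {
    "darn": "****",                 # profanity-filter style masking
    "broad stream": "BroadStream",  # consistent presentation of a name
    "vo caption": "VoCaption",
}


def apply_substitutions(text, substitutions=SUBSTITUTIONS):
    """Replace whole-word, case-insensitive matches with their listed form."""
    if not substitutions:
        return text
    # Try longer entries first so multi-word phrases win over shorter overlaps.
    keys = sorted(substitutions, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(k) for k in keys) + r")\b",
        re.IGNORECASE,
    )
    lookup = {k.lower(): v for k, v in substitutions.items()}
    return pattern.sub(lambda m: lookup[m.group(0).lower()], text)


if __name__ == "__main__":
    print(apply_substitutions("Welcome to Broad Stream, darn it"))
    # prints: Welcome to BroadStream, **** it
```

Matching on word boundaries means a listed word is not replaced when it appears inside a longer word, which keeps the filter from altering unrelated text.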